Introduction

We have all heard of the possibility of Artificial Intelligence (AI) based machines making humans redundant when it comes to performing dangerous, laborious, mundane or precision-requiring tasks. We have also read terms like deep learning and neural networks, sometimes used interchangeably with AI, without much clarity on what these words mean. It may be time to look a little deeper into what these terms mean and what impact they could have in the diagnostics world.

“Can machines think?”

– by Alan Turing in 1950

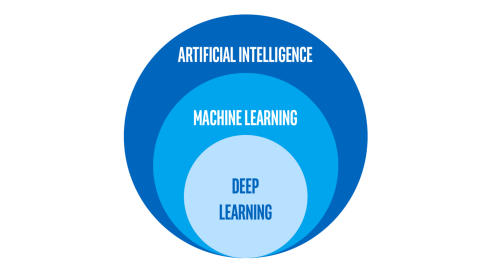

Artificial Intelligence (AI) can be defined as an attempt by machines to make decisions as a human would, by mimicking human reasoning.

Machine Learning (ML) is a subset of AI in which statistical methods are used to improve results based on past experience.

Deep learning (DL) is a subset of ML in which the statistical model is loosely modeled on the structure of the human brain.

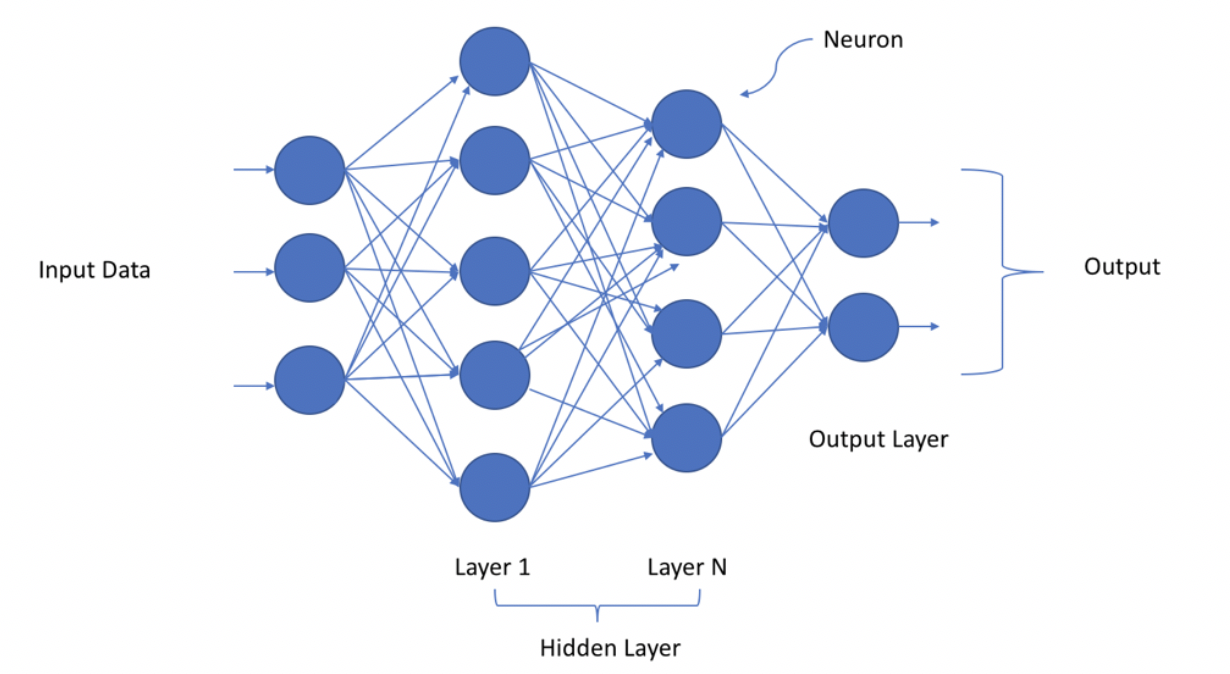

A model here can be visualized as a black box with an input pipe and an output pipe. Inside the black box is a neural network, loosely modeled on the neurons of a human brain but represented as a complex mathematical formula. Weights and biases are sets of numbers attached to each neuron. Different models may share the same structure; what makes a model unique, and what separates a trained model from an untrained one, is its weights and biases. They define the behavior of each neuron and dictate what the output will be for a given input.
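To make the role of weights and biases concrete, here is a minimal sketch of a single artificial neuron. The sigmoid activation, the function names and all the numbers are assumptions chosen for illustration; they are not taken from any particular model.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


# The same input through the same structure, with different weights and biases.
inputs = [0.5, 0.8]  # illustrative values only
untrained = neuron(inputs, weights=[0.0, 0.0], bias=0.0)
trained = neuron(inputs, weights=[2.0, -1.0], bias=0.5)  # hypothetical "learned" values

print(untrained, trained)
```

The structure of the neuron is identical in both calls; only the numbers inside it change, and with them the output.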

The ground truth is the known output for a particular input, and the output from the model is known as the predicted output. The accuracy or performance of a DL model can be estimated empirically by running multiple inputs whose outputs are already known through the model and comparing the model’s outputs with the known outputs.
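As a rough sketch of that comparison, the snippet below computes accuracy as the fraction of predictions that match the ground truth. The labels and the `accuracy` helper are made up for illustration; real evaluations usually report more than a single number.

```python
def accuracy(predicted, ground_truth):
    """Fraction of predictions that match the known (ground-truth) labels."""
    if len(predicted) != len(ground_truth):
        raise ValueError("predicted and ground_truth must have the same length")
    if not ground_truth:
        raise ValueError("at least one sample is required")
    correct = sum(p == t for p, t in zip(predicted, ground_truth))
    return correct / len(ground_truth)


# Hypothetical labels for four samples.
ground_truth = ["benign", "malignant", "benign", "malignant"]
predicted = ["benign", "malignant", "malignant", "malignant"]

score = accuracy(predicted, ground_truth)
print(score)  # 3 of the 4 predictions match
```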

A good analogy is someone learning to ride a bicycle. The person’s inputs are the feeling of balance and the sight of the path ahead. At first the person finds it difficult to keep their balance and move along the path at the same time. The brain tries many combinations of muscle movements and, after many tries, finds the right way to balance and move ahead at once. In machine learning this learning process is called training a model, and every attempt to balance and keep moving is called a cycle of training.

From Input to Output of Deep Learning models

The very first step in setting up a working deep learning model is to train it. The model needs to be fed data, which in our case will be an image, along with the ground truth, which could be a label indicating the diagnosis made by a real pathologist.

In each cycle, the input passes through the hidden layers, where the input data points are mathematically combined with each layer’s weights and biases. The result then reaches the output layer, which represents the estimated output. By comparing this estimate with the ground truth, the model calculates its error and changes the strengths of the neural connections, i.e. the weights and biases.

After several such cycles, the connection strengths, that is, the weights and biases of the neural network, have been adjusted so that the results match the ground truth. The training achieves its objective if the model learns to produce the correct output even with new input data.
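The training cycle described above can be sketched with a deliberately tiny model: a single neuron with one weight and one bias, and no hidden layers. The toy data, the learning rate and the number of cycles are all assumptions for illustration; a real deep learning model has many layers and is trained on images rather than single numbers.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(x, weight, bias):
    """Estimated output of a single neuron for input x."""
    return sigmoid(weight * x + bias)


def train(samples, cycles=2000, learning_rate=0.5):
    """Adjust the weight and bias so predictions move towards the ground truth."""
    weight, bias = 0.0, 0.0  # untrained starting point
    for _ in range(cycles):
        grad_w = grad_b = 0.0
        for x, truth in samples:
            error = predict(x, weight, bias) - truth  # estimate vs ground truth
            grad_w += error * x
            grad_b += error
        # Nudge the connection strengths in the direction that reduces the error.
        weight -= learning_rate * grad_w / len(samples)
        bias -= learning_rate * grad_b / len(samples)
    return weight, bias


# Toy data: (input value, ground-truth label). Purely illustrative.
samples = [(0.1, 0), (0.2, 0), (0.8, 1), (0.9, 1)]
weight, bias = train(samples)

# Inputs the model has not seen during training.
print(predict(0.05, weight, bias), predict(0.95, weight, bias))
```

After training, inputs near the low end should score below 0.5 and inputs near the high end above it, including values that were not part of the training data.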

Diagnosis with Deep Learning

Deep learning models accumulate the data from all the doctors who contribute to annotating the samples for training the model. The recent AI advancements with deep learning in pathology are of great importance and deserve appreciation.

Because diagnosis depends on doctors with vast experience, there is a case for a model that is both hyper-vigilant and prompt. Some cases, certain forms of breast and prostate cancer for example, require evaluating large amounts of data to form a diagnosis, are time-consuming, and can still result in very low agreement in diagnosis.

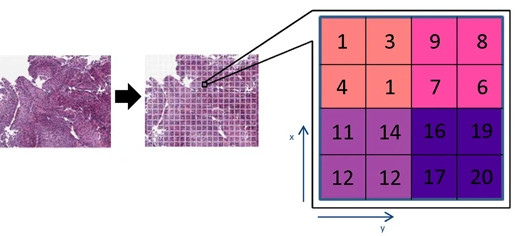

Consider this: a patient who needs a diagnosis may have multiple slides. Going through each slide means looking through thousands of 10 MP photos, where any pixel could contain information that would affect the diagnosis. It is time-consuming.

An added dimension of experience is the limits of the human brain. While pathologists with years of experience under their belt will have a longer list of decisive markers, they may not have had access to different kinds of samples. A pathologist with more than a decade’s experience can still only reference samples they have observed during their career. Their knowledge cannot be transferred as raw data from one pathologist to another.

With trained deep learning models, by contrast, all it takes is copying the model, re-training it with another set of samples, and sharing the upgraded model across systems. What deep learning essentially does is sift through extensive data to locate or predict the sections of the image most likely to hold information relevant to the diagnosis.

Recent technical advancements

Because radiology data is already recorded and transferred in a digital format, AI-based diagnosis was lower-hanging fruit for radiology than for pathology. There are several products on the market, like Qure.ai, that examine X-rays, MRIs, and CT scans and identify patients with diseases like tuberculosis or stroke.

What an AI system does is break the large whole slide image, consisting of millions of pixels, into smaller chunks and feed them into the model, which then analyzes the data to predict the presence or absence of disease.
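A minimal sketch of that chunking step is shown below. It only computes the pixel coordinates of each chunk; the `tile_boxes` name, the image dimensions and the tile size are illustrative assumptions, and reading the actual slide file is left out.

```python
def tile_boxes(width, height, tile_size):
    """Return (left, top, right, bottom) boxes that cover the whole image."""
    if tile_size <= 0:
        raise ValueError("tile_size must be positive")
    boxes = []
    for top in range(0, height, tile_size):
        for left in range(0, width, tile_size):
            # Tiles on the right and bottom edges are clipped to the image.
            right = min(left + tile_size, width)
            bottom = min(top + tile_size, height)
            boxes.append((left, top, right, bottom))
    return boxes


# Illustrative size only; real whole slide images are far larger.
boxes = tile_boxes(width=1000, height=600, tile_size=256)
print(len(boxes), boxes[0], boxes[-1])
```

Each box could then be cropped from the image and passed to the model on its own, with the per-chunk predictions combined afterwards.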

AI in the pathology space is relatively new because the digitization of data has only just started to gain momentum. Products like PathAI assist pathologists in diagnosis by working on whole slide images and pointing out areas of possible cancer for pathologists to review. With an aging population and diseases like cancer being positively correlated with age, it is critical to have technologies that sift through data for doctors, and AI is a natural fit for that role.

Ethics, Legality, and Regulations

Everything about AI paints a picture of a rapidly growing technological revolution, everything except the ethics around its use and implementation.

The ethics still need a lot of navigating, with social acceptance and risk/liability among the most talked-about aspects. Human error has been accounted for in the systems currently in use, and the checks and balances that we use for human interaction may not be suitable for decisions made by machines.

Intuitively we know that the standards we set for a machine should be high. For example, even where self-driving systems such as Tesla’s are reported to be statistically safer on the road than human drivers, this does not settle the question of liability in the rare event of a crash where there might be a technical oversight.

Similarly, a pathologist missing a diagnosis versus an AI’s miscalculation creates a moral dilemma. The way towards acceptance would be for the system’s accuracy to be significantly higher than a pathologist’s, with a pathologist monitoring it.

Regulations have always been reactive and rather slow to respond to new technologies, while inventions and changes maintain their usual fast pace. Therefore, to promote innovation, the initial implementation and testing need to be evaluated thoroughly while keeping red tape to a minimum, since it can be an entry barrier for startups.

Conclusion

Effective and timely treatment of a patient hinges on access to good-quality diagnosis, so this is an exciting phase for the diagnostic industry!

We are bound to see new AI technologies and diagnosis methods emerge, along with entrepreneurial activity supported by the industry, to navigate the ground-breaking technology and the regulatory landscape.

To know more about our product or what we do, follow us on social media:

https://www.facebook.com/medprimetech/

https://www.linkedin.com/company/6613262/admin/